The Digital Tongue: Exploring the Legacy of Speech Synthesis in Arcades

Speech synthesis in arcade games is an integral yet often overlooked part of video game history, a blend of entertainment technology and computational linguistics. Its roots trace back to the early 1980s, when developers began experimenting with human-like voices in games. The earliest implementations were basic, tightly constrained by the hardware of the time.

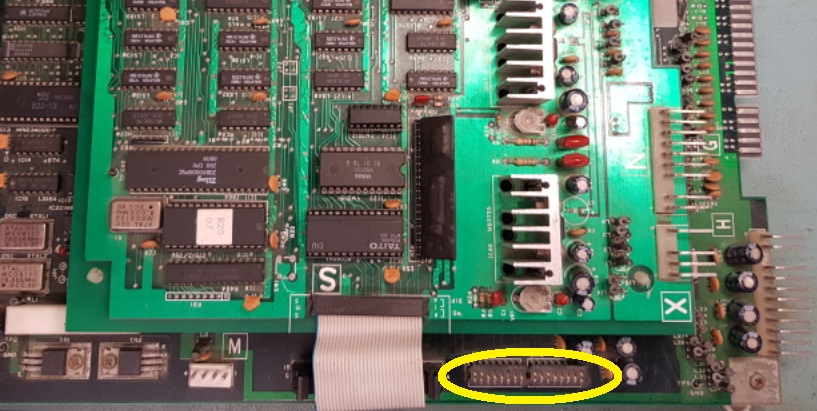

In 1980, Taito’s Stratovox became the first arcade game to integrate speech synthesis. In the same year, Berzerk introduced a distinctively robotic voice. By 1982, Q*bert was using a speech synthesis chip to generate an alien language by playing sequences of random sounds through the chip, an early example of linguistic creativity in gameplay. In 1983, Sinistar became the first arcade game to use recorded digital audio samples, followed in the same year by Punch-Out!!, the first sports game to feature spoken commentary. The introduction of laserdisc-based arcade games with Dragon’s Lair (also in 1983) effectively solved the memory issues of the hardware of the time and took sampled audio to its natural conclusion with fully recorded narration (echoed much later by PC CD-ROM releases such as The 7th Guest).

Initially, however, developers had to be far more creative in how they generated speech. Linear Predictive Coding (LPC) was one of the foremost methods employed. Rather than storing a recording, LPC models each short slice of speech as a simple excitation signal (a buzz or a hiss) shaped by a filter, so only a handful of filter, pitch, and energy parameters need to be stored and the sound is rebuilt algorithmically at playback. Early arcade machines faced severe memory constraints, which limited the complexity and length of the speech they could generate. Even so, the field gradually evolved: improved algorithms allowed more natural-sounding speech, and larger memories allowed longer and more varied phrases.
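To make the idea behind LPC a little more concrete, here is a minimal Python sketch. It assumes the textbook autocorrelation method with the Levinson-Durbin recursion and is not taken from any arcade hardware; the function names (`autocorrelation`, `levinson_durbin`, `synthesize`) and the test tone are invented purely for illustration. A frame of samples is reduced to a few predictor coefficients, and a signal is later rebuilt by driving a filter made from those coefficients with a simple excitation.

```python
import math

def autocorrelation(frame, max_lag):
    """Return the autocorrelation values r[0..max_lag] of one frame."""
    n = len(frame)
    return [sum(frame[i] * frame[i + lag] for i in range(n - lag))
            for lag in range(max_lag + 1)]

def levinson_durbin(r, order):
    """Find predictor coefficients a[1..order] from autocorrelation r.

    The predictor estimates x[n] as sum(a[k] * x[n - k]) for k = 1..order.
    Returns (coefficients, residual_energy).
    """
    a = [0.0] * (order + 1)
    err = r[0]
    for i in range(1, order + 1):
        # Reflection coefficient for this stage of the recursion.
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / err
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= (1.0 - k * k)
    return a[1:], err

def synthesize(coeffs, excitation):
    """Run the excitation through the all-pole filter defined by coeffs."""
    out = []
    for n, e in enumerate(excitation):
        feedback = sum(a * out[n - k]
                       for k, a in enumerate(coeffs, start=1) if n - k >= 0)
        out.append(e + feedback)
    return out

# Illustrative input: a pure tone stands in for one frame of voiced speech.
frame = [math.sin(1.0 * n) for n in range(4000)]
coeffs, residual = levinson_durbin(autocorrelation(frame, 2), 2)
print("predictor coefficients:", coeffs)

# Rebuild a signal from the coefficients and a single impulse of excitation.
rebuilt = synthesize(coeffs, [1.0] + [0.0] * 99)
```

In this toy case, thousands of samples collapse to two coefficients. Real speech needs more coefficients per frame plus pitch and energy values, but the storage saving follows the same principle.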

Mechanically, speech in these arcade games began with simple waveforms shaped to mimic the human voice and later moved to digital sampling. In digital sampling, actual human voices were recorded, digitized, and then played back in the game. The technology was more than a technical showpiece: it was integrated into gameplay for narration, character voices, and game alerts.
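The digitizing step can be sketched in a few lines of Python. This is only an illustration under assumed settings: the 8,000 Hz sample rate, the 8-bit depth, and the helper names `sample_and_quantize` and `storage_bytes` are hypothetical choices rather than figures from any specific game, and a sine tone stands in for a recorded voice.

```python
import math

def sample_and_quantize(signal, duration_s, sample_rate_hz, bits):
    """Sample a signal (a function of time in seconds, range -1..1)
    and quantize each sample to an unsigned integer of the given bit depth."""
    levels = (1 << bits) - 1
    count = int(duration_s * sample_rate_hz)
    samples = []
    for n in range(count):
        value = signal(n / sample_rate_hz)
        value = max(-1.0, min(1.0, value))        # clip to the valid range
        samples.append(round((value + 1.0) / 2.0 * levels))
    return samples

def storage_bytes(num_samples, bits):
    """Bytes needed to store the samples when tightly packed."""
    return math.ceil(num_samples * bits / 8)

# Illustrative input: a 440 Hz tone stands in for a recorded voice.
tone = lambda t: math.sin(2 * math.pi * 440 * t)
samples = sample_and_quantize(tone, 0.5, 8000, 8)
print(len(samples), "samples,", storage_bytes(len(samples), 8), "bytes")
```

Under these assumed settings, every second of audio costs 8,000 bytes, which gives a sense of why sampled speech had to wait for larger memories and why short phrases were the norm.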

However, the journey was fraught with challenges. Early machines had to constantly balance the quality of speech against other game functions because memory and processing power were so limited, and achieving clear, understandable speech was a significant hurdle. To overcome it, some games used specialized hardware chips designed specifically for speech synthesis, and developers employed creative software optimizations to compress speech data without compromising clarity.
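One simple way to picture the trade-off between data size and clarity is delta modulation, which stores a single bit per sample ("go up" or "go down") instead of a full sample value. The Python sketch below is offered as an illustrative assumption, not as the scheme any particular game used; the function names and the fixed step size are hypothetical.

```python
import math

def delta_encode(samples, step):
    """Encode samples as one bit each: 1 = step up, 0 = step down."""
    bits = []
    estimate = 0.0
    for s in samples:
        bit = 1 if s >= estimate else 0
        estimate += step if bit else -step   # track the input signal
        bits.append(bit)
    return bits

def delta_decode(bits, step):
    """Rebuild an approximation of the signal from the bit stream."""
    out = []
    estimate = 0.0
    for bit in bits:
        estimate += step if bit else -step
        out.append(estimate)
    return out

# Illustrative input: a slowly varying tone stands in for speech.
signal = [math.sin(2 * math.pi * n / 200) for n in range(1000)]
bits = delta_encode(signal, 0.05)
decoded = delta_decode(bits, 0.05)
worst = max(abs(a - b) for a, b in zip(signal, decoded))
print("bits stored:", len(bits), "worst-case error:", worst)
```

At the same sample rate, one bit per sample is an eightfold saving over 8-bit samples. The cost is that a step size that is too small cannot keep up with fast changes, while one that is too large adds audible graininess, which is the kind of balance between size and clarity described above.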

Speech synthesis left its mark on arcade games in three ways: it enhanced player engagement by adding a new level of immersion and interaction, it pushed technological advances in gaming and computational linguistics, and it left a cultural imprint as iconic phrases from these games entered popular culture. The evolution of speech synthesis is a remarkable chapter in the history of arcade technology. It showcases the creativity and resilience of early game developers, and it set the stage for the sophisticated voice acting we see in modern gaming. With speech synthesis enjoying something of a renaissance in the era of generative AI and text-based speech synthesis, I don’t think we have seen the last of artificially generated speech in computer games. When you hear it in future games, and you will, remember where it all began.